How Do I Free Up Application Memory On My Mac

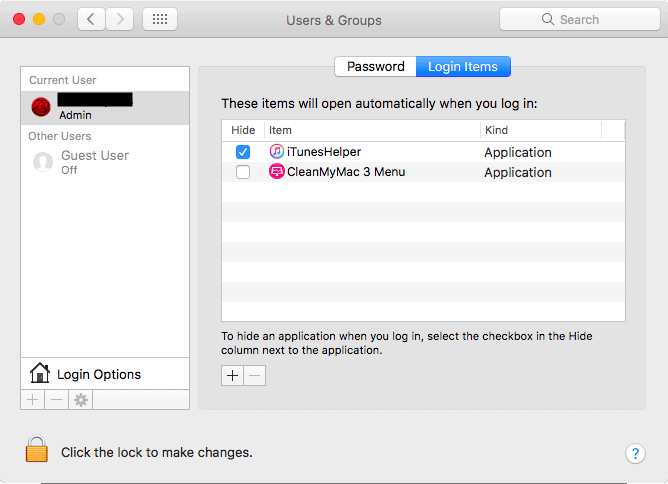

If that doesn't help, force quitting the affected application should solve the problem. Alternatively, check your memory pressure (the memory_pressure command in Terminal) and make sure your startup disk has enough free space for swap files. You can also try running sudo purge from the Terminal.

Applications like Memory Clean can purge your RAM for you, and some can even do it automatically. However, purging memory does not always speed up your system and can sometimes slow it down: when you purge RAM, applications that are open (even in the background) have to re-create the data they were keeping in memory. To run Memory Clean, click its icon in the menu bar. A window appears showing live figures for your Mac's active, wired, inactive, and free memory. Click the Clean Memory button to free up memory.

To quickly find and safely remove cache files on your Mac, you can use a dedicated tool such as MacCleaner Pro. The app scans your drive and finds cache files in a few seconds. To remove them, select them in the Clean up Mac section and click the Clean Up button.

Memory (RAM) and storage (hard disk or SSD) are two different resources.

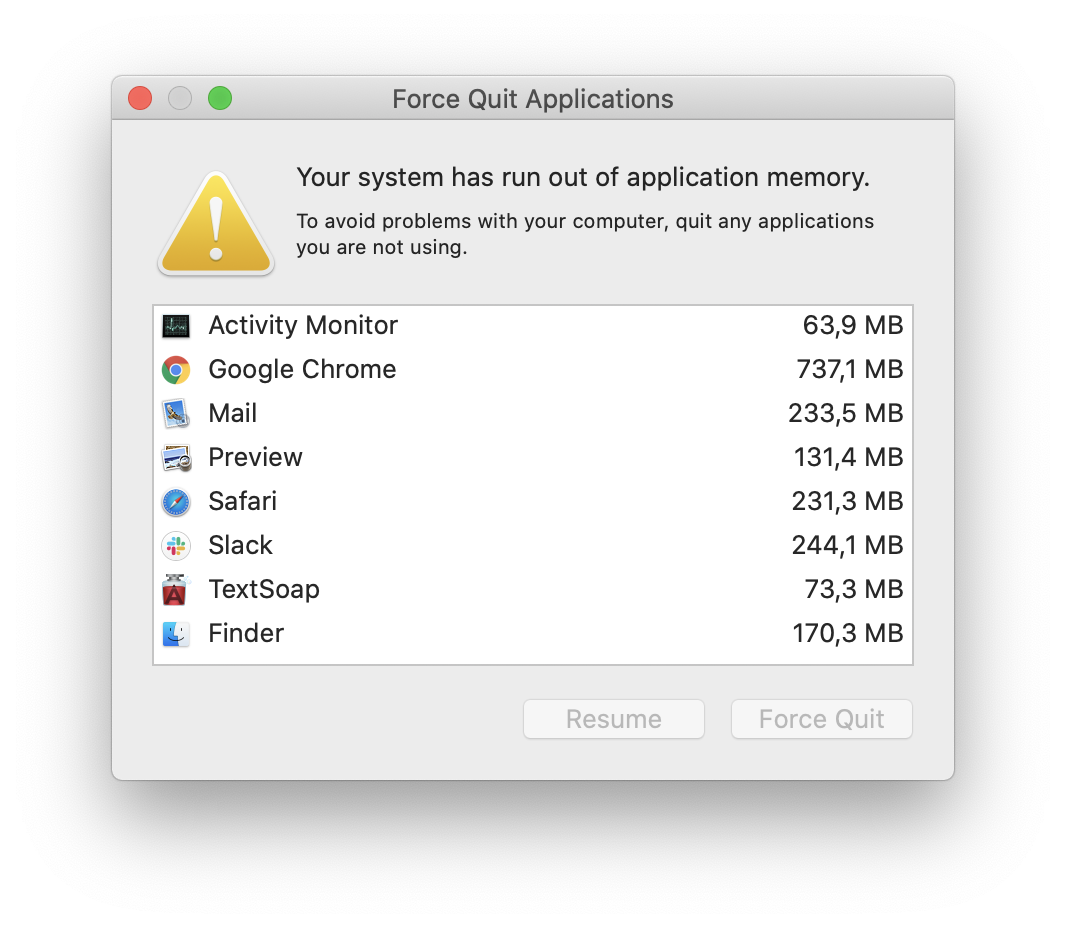

That Mac has plenty of available storage. If you are running low on memory, use Activity Monitor to identify the memory-intensive processes causing that warning.

To learn how to use Activity Monitor please read the Activity Monitor User Guide. For memory usage, refer to View memory usage in Activity Monitor on Mac.

Once you determine the memory-intensive process or processes, a solution can be provided. Without that information it is premature to draw any conclusions, but the most common explanation for that warning is inadvertently installed adware. To learn how to recognize adware so that you do not install it, please read How to install adware - Apple Community.

Jan 1, 2019 7:49 AM

With CleanMyMac X installed on your system, you get a Heavy memory usage alert when your Mac is running out of free RAM. Click the Free Up button to release some RAM and speed up your Mac. On classic Mac OS (version 9 and earlier), you could also click an application's icon, choose Get Info from the File menu, and adjust the program's memory allocation in the window that appeared; Mac OS X and later manage application memory automatically and no longer offer this setting.

Memory is an important resource for your application, so it’s important to think about how your application will use memory and what the most efficient allocation approaches might be. Most applications do not need to do anything special; they can simply allocate objects or memory blocks as needed and not see any performance degradation. For applications that use large amounts of memory, however, carefully planning out your memory allocation strategy can make a big difference.

The following sections describe the basic options for allocating memory along with tips for doing so efficiently. To determine if your application has memory performance problems in the first place, you need to use the Xcode tools to look at your application’s allocation patterns while it is running. For information on how to do that, see Tracking Memory Usage.

Tips for Improving Memory-Related Performance

As you design your code, you should always be aware of how you are using memory. Because memory is an important resource, you want to be sure to use it efficiently and not be wasteful. Besides allocating the right amount of memory for a given operation, the following sections describe other ways to improve the efficiency of your program’s memory usage.

Defer Your Memory Allocations

Every memory allocation has a performance cost. That cost includes the time it takes to allocate the memory in your program’s logical address space and the time it takes to assign that address space to physical memory. If you do not plan to use a particular block of memory right away, deferring the allocation until you actually need it is the best course of action. For example, to avoid the appearance of your app launching slowly, minimize the amount of memory you allocate at launch time. Instead, focus your initial memory allocations on the objects needed to display your user interface and respond to input from the user. Defer other allocations until the user starts interacting with your application and issuing commands. This lazy allocation of memory saves time right away and ensures that any memory that is allocated is actually used.

One place where lazy initialization can be somewhat tricky is with global variables. Because they are global to your application, you need to make sure global variables are initialized properly before they are used by the rest of your code. The basic approach often taken with global variables is to define a static variable in one of your code modules and use a public accessor function to get and set the value, as shown in Listing 1.

Listing 1 Lazy allocation of memory through an accessor
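The code for Listing 1 is not included in this copy of the text. As a stand-in, here is a minimal C sketch of the same accessor pattern, assuming a hypothetical global lookup table; `g_table`, `get_table`, and `TABLE_ENTRIES` are illustrative names, not Apple's.

```c
#include <stdlib.h>

/* Hypothetical size of a global lookup table. */
enum { TABLE_ENTRIES = 1024 };

/* File-scope (static) so other modules can reach it only via get_table(). */
static int *g_table = NULL;

/* Returns the table, allocating it the first time it is requested.
   Returns NULL if the allocation fails. Not thread-safe: two threads
   could both see NULL and allocate the table twice. */
int *get_table(void) {
    if (g_table == NULL) {
        /* calloc, so entries that are never written read as zero */
        g_table = calloc(TABLE_ENTRIES, sizeof *g_table);
    }
    return g_table;
}
```

Nothing is allocated at launch; the first call to `get_table()` pays the cost, and later calls just return the cached pointer.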

The only time you have to be careful with code of this sort is when it might be called from multiple threads. In a multithreaded environment, you need to use locks to protect the if statement in your accessor method. The downside to that approach, though, is that acquiring the lock takes a nontrivial amount of time and must be done every time you access the global variable, which is a performance hit of a different kind. A simpler approach is to initialize all global variables from your application’s main thread before it spawns any additional threads.
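Besides a lock or main-thread initialization, POSIX offers `pthread_once`, which runs an initializer exactly once even when several threads call the accessor at the same time. This is a swapped-in technique that the text does not mention; the sketch applies it to the same kind of hypothetical lazily allocated table (`init_table` and `get_table_threadsafe` are illustrative names).

```c
#include <pthread.h>
#include <stdlib.h>

/* Hypothetical size of a global lookup table. */
enum { TABLE_ENTRIES = 1024 };

static int *g_table = NULL;
static pthread_once_t g_table_once = PTHREAD_ONCE_INIT;

/* Runs exactly once, on whichever thread first calls the accessor. */
static void init_table(void) {
    g_table = calloc(TABLE_ENTRIES, sizeof *g_table);
}

/* Thread-safe lazy accessor: pthread_once guarantees init_table() has
   finished before any caller continues past it. Returns NULL only if
   the one-time allocation failed. */
int *get_table_threadsafe(void) {
    pthread_once(&g_table_once, init_table);
    return g_table;
}
```

The allocation is still deferred until first use, but callers never see a half-initialized pointer and never allocate twice.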

Initialize Memory Blocks Efficiently

Small blocks of memory, allocated using the malloc function, are not guaranteed to be initialized with zeroes. Although you could use the memset function to initialize the memory, a better choice is to use the calloc routine to allocate the memory in the first place. The calloc function reserves the required virtual address space for the memory but waits until the memory is actually used before initializing it. This approach is much more efficient than using memset, which forces the virtual memory system to map the corresponding pages into physical memory in order to zero-initialize them. Another advantage of using the calloc function is that it lets the system initialize pages as they’re used, as opposed to all at once.
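A minimal C sketch contrasting the two ways of getting a zero-filled array. Both return the same contents; the difference the text describes is when the zeroing work happens. `zeroed_with_memset` and `zeroed_with_calloc` are hypothetical names.

```c
#include <stdlib.h>
#include <string.h>

/* malloc + memset: memset writes every byte now, which forces every
   page of the block to become resident immediately. */
int *zeroed_with_memset(size_t count) {
    int *p = malloc(count * sizeof *p);
    if (p != NULL)
        memset(p, 0, count * sizeof *p);
    return p;
}

/* calloc: for large requests, fresh pages from the kernel already read
   as zero, so the allocator can skip the explicit clearing, and pages
   only become resident when they are first touched. */
int *zeroed_with_calloc(size_t count) {
    return calloc(count, sizeof(int));
}
```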

Reuse Temporary Memory Buffers

If you have a frequently called function that creates a large temporary buffer for some calculations, consider reusing that buffer rather than reallocating it each time you call the function. Even if your function needs a variable amount of buffer space, you can always grow the buffer as needed using the realloc function. For multithreaded applications, the best way to reuse buffers is to add them to your thread-local storage. Although you could store the buffer in a static variable in your function, doing so would prevent you from using that function on multiple threads at the same time.
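A minimal C11 sketch of a per-thread scratch buffer, assuming `_Thread_local` storage is available in your build. `scratch_buffer` and the example caller `to_upper_scratch` are hypothetical names. The buffer grows with realloc and is kept between calls, so a thread that calls the function repeatedly only allocates when it needs more room than before.

```c
#include <ctype.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

/* One cached buffer per thread; never shared between threads. */
static _Thread_local char  *tls_buf = NULL;
static _Thread_local size_t tls_cap = 0;

/* Returns a buffer of at least `need` bytes (need > 0) for the calling
   thread, growing it with realloc only when it is too small.
   Returns NULL if growing fails. */
char *scratch_buffer(size_t need) {
    if (need > tls_cap) {
        size_t cap = tls_cap ? tls_cap : 256;
        while (cap < need) {
            if (cap > SIZE_MAX / 2) {   /* doubling would overflow */
                cap = need;
                break;
            }
            cap *= 2;                   /* grow geometrically */
        }
        char *p = realloc(tls_buf, cap);
        if (p == NULL)
            return NULL;                /* old buffer stays valid and cached */
        tls_buf = p;
        tls_cap = cap;
    }
    return tls_buf;
}

/* Hypothetical caller: upper-cases s into the reused buffer. */
const char *to_upper_scratch(const char *s) {
    size_t n = strlen(s) + 1;           /* include the terminator */
    char *buf = scratch_buffer(n);
    if (buf == NULL)
        return NULL;
    for (size_t i = 0; i < n; i++)
        buf[i] = (char)toupper((unsigned char)s[i]);
    return buf;
}
```

The returned pointer stays valid only until the same thread asks for a larger buffer (realloc may move it), so callers should copy out anything they need to keep. The sketch never frees the buffer, which is the footprint cost the next paragraph warns about.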

Caching buffers eliminates much of the overhead for functions that regularly allocate and free large blocks of memory. However, this technique is only appropriate for functions that are called frequently. Also, you should be careful not to cache too many large buffers. Caching buffers does add to the memory footprint of your application and should only be used if testing indicates it would yield better performance.

Free Unused Memory

For memory allocated using the malloc library, it is important to free up memory as soon as you are done using it. Forgetting to free up memory causes memory leaks, which reduce the amount of memory available to your application and impact performance. Left unchecked, memory leaks can also put your application into a state where it cannot do anything because it cannot allocate the required memory.

Note: Applications built using the Automatic Reference Counting (ARC) compiler option do not need to release Objective-C objects explicitly. Instead, the app must store strong references to objects it wants to keep and remove references to objects it does not need. When an object does not have any strong references to it, the compiler automatically releases it. For more information about supporting ARC, see Transitioning to ARC Release Notes.

No matter which platform you are targeting, you should always eliminate memory leaks in your application. For code that uses malloc, remember that being lazy is fine for allocating memory but do not be lazy about freeing up that memory. To help track down memory leaks in your applications, use the Instruments app.
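A minimal C sketch of that rule for malloc-based code: allocate a temporary only when you need it and free it as soon as you are done, on every path out of the function. `median` and `cmp_int` are hypothetical names.

```c
#include <stdlib.h>
#include <string.h>

/* qsort comparator for ints, written to avoid overflow from subtraction. */
static int cmp_int(const void *a, const void *b) {
    int x = *(const int *)a, y = *(const int *)b;
    return (x > y) - (x < y);
}

/* Stores the median of v[0..n-1] in *out without modifying v.
   Returns 0 on success, -1 if n == 0 or the temporary copy can't be made. */
int median(const int *v, size_t n, double *out) {
    if (n == 0)
        return -1;
    int *copy = malloc(n * sizeof *copy);    /* allocated only when needed */
    if (copy == NULL)
        return -1;
    memcpy(copy, v, n * sizeof *copy);
    qsort(copy, n, sizeof *copy, cmp_int);
    *out = (n % 2) ? copy[n / 2]
                   : (copy[n / 2 - 1] + (double)copy[n / 2]) / 2.0;
    free(copy);                              /* released as soon as it's unused */
    return 0;
}
```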

Memory Allocation Techniques

Because memory is such a fundamental resource, OS X and iOS both provide several ways to allocate it. Which allocation techniques you use will depend mostly on your needs, but in the end all memory allocations eventually use the malloc library to create the memory. Even Cocoa objects are allocated using the malloc library eventually. The use of this single library makes it possible for the performance tools to report on all of the memory allocations in your application.

If you are writing a Cocoa application, you might allocate memory only in the form of objects using the alloc method of NSObject. Even so, there may be times when you need to go beyond the basic object-related memory blocks and use other memory allocation techniques. For example, you might allocate memory directly using malloc in order to pass it to a low-level function call.

The following sections provide information about the malloc library and virtual memory system and how they perform allocations. The purpose of these sections is to help you identify the costs associated with each type of specialized allocation. You should use this information to optimize memory allocations in your code.

Note: These sections assume you are using the system-supplied version of the malloc library to do your allocations. If you are using a custom malloc library, these techniques may not apply.

Allocating Objects

For Objective-C based applications, you allocate objects using one of two techniques. You can either use the alloc class method, followed by a call to a class initialization method, or you can use the new class method to allocate the object and call its default init method in one step.

After creating an object, the compiler’s ARC feature determines the lifespan of an object and when it should be deleted. Every new object needs at least one strong reference to it to prevent it from being deallocated right away. Therefore, when you create a new object, you should always create at least one strong reference to it. After that, you may create additional strong or weak references depending on the needs of your code. When all strong references to an object are removed, the compiler automatically deallocates it.

For more information about ARC and how you manage the lifespan of objects, see Transitioning to ARC Release Notes.

Allocating Small Memory Blocks Using Malloc

For small memory allocations, where small is anything less than a few virtual memory pages, malloc sub-allocates the requested amount of memory from a list (or “pool”) of free blocks of increasing size. Any small blocks you deallocate using the free routine are added back to the pool and reused on a “best fit” basis. The memory pool itself consists of several virtual memory pages that are allocated using the vm_allocate routine and managed for you by the system.

When allocating any small blocks of memory, remember that the granularity for blocks allocated by the malloc library is 16 bytes. Thus, the smallest block of memory you can allocate is 16 bytes and any blocks larger than that are a multiple of 16. For example, if you call malloc and ask for 4 bytes, it returns a block whose size is 16 bytes; if you request 24 bytes, it returns a block whose size is 32 bytes. Because of this granularity, you should design your data structures carefully and try to make them multiples of 16 bytes whenever possible.

Note: By their nature, allocations smaller than a single virtual memory page in size cannot be page aligned.
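To see what the 16-byte granularity costs, here is a small C helper that models the rounding described above. It assumes the 16-byte quantum from the text; on macOS, `malloc_size()` from `<malloc/malloc.h>` reports the actual size of a live block and is the number to trust. `small_block_size` is a hypothetical name.

```c
#include <stddef.h>

/* Granularity of small malloc blocks, as described in the text. */
#define MALLOC_QUANTUM ((size_t)16)

/* Models the block size returned for a small request of n bytes:
   n rounded up to the next multiple of 16, with a 16-byte minimum. */
size_t small_block_size(size_t n) {
    if (n == 0)
        return MALLOC_QUANTUM;
    return (n + MALLOC_QUANTUM - 1) & ~(MALLOC_QUANTUM - 1);
}
```

For example, 1,000 separately allocated 20-byte records occupy 32,000 bytes under this model rather than 20,000, so adding 12 bytes of useful data to each record costs nothing extra, while trimming it to 16 bytes halves its footprint.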

Allocating Large Memory Blocks Using Malloc

For large memory allocations, where large is anything more than a few virtual memory pages, malloc automatically uses the vm_allocate routine to obtain the requested memory. The vm_allocate routine assigns an address range to the new block in the logical address space of the current process, but it does not assign any physical memory to those pages right away. Instead, the kernel does the following:

- It maps a range of memory in the virtual address space of this process by creating a map entry; the map entry is a simple structure that defines the starting and ending addresses of the region.

- The range of memory is backed by the default pager.

- The kernel creates and initializes a VM object, associating it with the map entry.

At this point there are no pages resident in physical memory and no pages in the backing store. Everything is mapped virtually within the system. When your code accesses part of the memory block, by reading or writing to a specific address in it, a fault occurs because that address has not been mapped to physical memory. In OS X, the kernel also recognizes that the VM object has no backing store for the page on which this address occurs. The kernel then performs the following steps for each page fault:

- It acquires a page from the free list and fills it with zeroes.

- It inserts a reference to this page in the VM object’s list of resident pages.

- It maps the virtual page to the physical page by filling in a data structure called the pmap. The pmap contains the page table used by the processor (or by a separate memory management unit) to map a given virtual address to the actual hardware address.

The granularity of large memory blocks is equal to the size of a virtual memory page, which was 4096 bytes on the systems this guide was written for (Apple silicon Macs use 16 KB pages). In other words, any large memory allocation that is not a multiple of the page size is rounded up to the next multiple automatically. Thus, if you are allocating large memory buffers, you should make your buffer a multiple of the page size to avoid wasting memory.

Note: Large memory allocations are guaranteed to be page-aligned.
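A small helper, assuming POSIX `sysconf(_SC_PAGESIZE)` to read the page size at run time, rounds a request up to whole pages so you can size large buffers without wasting the tail of the last page. `round_to_pages` is a hypothetical name.

```c
#define _POSIX_C_SOURCE 200809L
#include <stddef.h>
#include <unistd.h>

/* Rounds n up to a whole number of virtual memory pages, using the page
   size reported at run time rather than a hard-coded 4096. */
size_t round_to_pages(size_t n) {
    size_t page = (size_t)sysconf(_SC_PAGESIZE);
    return (n + page - 1) / page * page;
}
```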

For large allocations, you may also find that it makes sense to allocate virtual memory directly using vm_allocate, rather than using malloc. The example in Listing 2 shows how to use the vm_allocate function.

Listing 2 Allocating memory with vm_allocate
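The code for Listing 2 is not included in this copy of the text. As a stand-in, here is a minimal C sketch, assuming the Mach calls `vm_allocate(mach_task_self(), &address, size, VM_FLAGS_ANYWHERE)` and `vm_deallocate(mach_task_self(), address, size)` from `<mach/mach.h>`. `reserve_pages` and `release_pages` are hypothetical names; on non-Apple systems the sketch substitutes anonymous `mmap`, a different API with similar zero-fill-on-demand behavior, only so that it still compiles there.

```c
#define _DEFAULT_SOURCE          /* exposes MAP_ANONYMOUS on glibc */
#include <stddef.h>
#include <stdint.h>
#ifdef __APPLE__
#include <mach/mach.h>
#else
#include <sys/mman.h>
#endif

/* Reserves `size` bytes of zero-filled, page-aligned virtual memory.
   Physical pages are supplied only when the memory is first touched.
   Returns NULL on failure. */
void *reserve_pages(size_t size) {
#ifdef __APPLE__
    vm_address_t addr = 0;
    kern_return_t kr = vm_allocate(mach_task_self(), &addr,
                                   (vm_size_t)size, VM_FLAGS_ANYWHERE);
    return kr == KERN_SUCCESS ? (void *)addr : NULL;
#else
    /* Non-Apple fallback (assumption): anonymous private mapping. */
    void *p = mmap(NULL, size, PROT_READ | PROT_WRITE,
                   MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    return p == MAP_FAILED ? NULL : p;
#endif
}

/* Returns memory obtained from reserve_pages(); size must match. */
void release_pages(void *p, size_t size) {
#ifdef __APPLE__
    vm_deallocate(mach_task_self(), (vm_address_t)p, (vm_size_t)size);
#else
    munmap(p, size);
#endif
}
```

The addresses are valid immediately, but, as with large malloc blocks, pages only become resident when first read or written.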

Allocating Memory in Batches

If your code allocates multiple, identically-sized memory blocks, you can use the malloc_zone_batch_malloc function to allocate those blocks all at once. This function offers better performance than a series of calls to malloc to allocate the same memory. Performance is best when the individual block size is relatively small—less than 4K in size. The function does its best to allocate all of the requested memory but may return less than was requested. When using this function, check the return values carefully to see how many blocks were actually allocated.

Batch allocation of memory blocks is supported in OS X version 10.3 and later and in iOS. For information, see the /usr/include/malloc/malloc.h header file.
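A minimal C sketch, assuming `malloc_zone_batch_malloc(zone, size, results, count)` and `malloc_zone_batch_free(zone, blocks, count)` as declared in `<malloc/malloc.h>`, used with the default zone. `batch_alloc` and `batch_release` are hypothetical names, and the non-Apple branch is an assumed fallback (one malloc per block) so the sketch compiles elsewhere. As the text warns, the caller must use the returned count, not the requested one.

```c
#include <stdlib.h>
#ifdef __APPLE__
#include <malloc/malloc.h>
#endif

/* Allocates up to `count` blocks of `size` bytes into results[] and
   returns how many were actually allocated (possibly fewer). */
unsigned batch_alloc(size_t size, void **results, unsigned count) {
#ifdef __APPLE__
    return malloc_zone_batch_malloc(malloc_default_zone(), size,
                                    results, count);
#else
    /* Fallback (assumption): stop at the first failed malloc. */
    unsigned n = 0;
    while (n < count && (results[n] = malloc(size)) != NULL)
        n++;
    return n;
#endif
}

/* Frees the first `count` blocks returned by batch_alloc(). */
void batch_release(void **blocks, unsigned count) {
#ifdef __APPLE__
    malloc_zone_batch_free(malloc_default_zone(), blocks, count);
#else
    for (unsigned i = 0; i < count; i++)
        free(blocks[i]);
#endif
}
```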

Allocating Shared Memory

Shared memory is memory that can be written to or read from by two or more processes. Shared memory can be inherited from a parent process, created by a shared memory server, or explicitly created by an application for export to other applications. Uses for shared memory include the following:

- Sharing large resources such as icons or sounds

- Fast communication between two or more processes

Shared memory is fragile and is generally not recommended when other, more reliable alternatives are available. If one program corrupts a section of shared memory, any programs that also use that memory share the corrupted data. The functions used to create and manage shared memory regions are in the /usr/include/sys/shm.h header file.
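A minimal sketch of the System V calls declared in `<sys/shm.h>` (`shmget`, `shmat`, `shmdt`, `shmctl`): a parent creates a private segment, a forked child inherits the attachment and writes into it, and the parent reads the result after the child exits. `shm_demo` is a hypothetical name, and error handling is kept to a minimum.

```c
#define _DEFAULT_SOURCE
#include <string.h>
#include <sys/types.h>
#include <sys/ipc.h>
#include <sys/shm.h>
#include <sys/wait.h>
#include <unistd.h>

/* Child writes msg into a shared segment; parent copies it into out.
   Returns 0 on success, -1 on any failure. */
int shm_demo(const char *msg, char *out, size_t outlen) {
    size_t len = strlen(msg) + 1;            /* include the terminator */
    if (len > outlen)
        return -1;

    /* New private segment, readable and writable by this user only. */
    int id = shmget(IPC_PRIVATE, len, IPC_CREAT | 0600);
    if (id == -1)
        return -1;

    /* Attach it; a forked child inherits the attachment. */
    char *mem = shmat(id, NULL, 0);
    if (mem == (void *)-1) {
        shmctl(id, IPC_RMID, NULL);
        return -1;
    }

    int rc = -1;
    pid_t pid = fork();
    if (pid == 0) {
        memcpy(mem, msg, len);               /* child writes shared memory */
        _exit(0);
    } else if (pid > 0) {
        int status;
        if (waitpid(pid, &status, 0) == pid &&
            WIFEXITED(status) && WEXITSTATUS(status) == 0) {
            memcpy(out, mem, len);           /* parent sees the child's write */
            rc = 0;
        }
    }

    shmdt(mem);                              /* detach from this process */
    shmctl(id, IPC_RMID, NULL);              /* mark the segment for removal */
    return rc;
}
```

Note that nothing here protects the segment from a misbehaving writer, which is exactly the fragility described above.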

Using Malloc Memory Zones

All memory blocks are allocated within a malloc zone (also referred to as a malloc heap). A zone is a variable-size range of virtual memory from which the memory system can allocate blocks. A zone has its own free list and pool of memory pages, and memory allocated within the zone remains on that set of pages. Zones are useful in situations where you need to create blocks of memory with similar access patterns or lifetimes. You can allocate many objects or blocks of memory in a zone and then destroy the zone to free them all, rather than releasing each block individually. In theory, using a zone in this way can minimize wasted space and reduce paging activity. In reality, the overhead of zones often eliminates the performance advantages associated with the zone.

Note: The term zone is synonymous with the terms heap, pool, and arena in terms of memory allocation using the malloc routines.

By default, allocations made using the malloc function occur within the default malloc zone, which is created when malloc is first called by your application. Although it is generally not recommended, you can create additional zones if measurements show there to be potential performance gains in your code. For example, if the effect of releasing a large number of temporary (and isolated) objects is slowing down your application, you could allocate them in a zone instead and simply deallocate the zone.

If you create objects (or allocate memory blocks) in a custom malloc zone, you can simply free the entire zone when you are done with it, instead of releasing the zone-allocated objects or memory blocks individually. When doing so, be sure your application data structures do not hold references to the memory in the custom zone. Attempting to access memory in a deallocated zone will cause a memory fault and crash your application.

Warning: You should never deallocate the default zone for your application.

At the malloc library level, support for zones is defined in /usr/include/malloc/malloc.h. Use the malloc_create_zone function to create a custom malloc zone or the malloc_default_zone function to get the default zone for your application. To allocate memory in a particular zone, use the malloc_zone_malloc, malloc_zone_calloc, malloc_zone_valloc, or malloc_zone_realloc functions. To release the memory in a custom zone, call malloc_destroy_zone.
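A minimal C sketch of the "allocate many, free all at once" pattern, assuming `malloc_create_zone(0, 0)`, `malloc_zone_malloc`, and `malloc_destroy_zone` from `<malloc/malloc.h>` on Apple platforms. `scratch_zone`, `scratch_create`, `scratch_alloc`, and `scratch_destroy` are hypothetical names. On other systems the sketch substitutes a simple linked list of malloc blocks freed together; that fallback is a different mechanism, added only so the code compiles elsewhere.

```c
#include <stddef.h>
#include <stdlib.h>
#ifdef __APPLE__
#include <malloc/malloc.h>

typedef malloc_zone_t scratch_zone;

/* Custom zone; a start size of 0 lets the system choose. */
scratch_zone *scratch_create(void) { return malloc_create_zone(0, 0); }
void *scratch_alloc(scratch_zone *z, size_t n) { return malloc_zone_malloc(z, n); }
/* Releases every block allocated in the zone at once. */
void scratch_destroy(scratch_zone *z) { malloc_destroy_zone(z); }

#else
/* Fallback (assumption): blocks chained together and freed as a group. */
typedef struct scratch_block {
    struct scratch_block *next;
    max_align_t payload[];           /* keeps returned memory aligned */
} scratch_block;

typedef struct { scratch_block *head; } scratch_zone;

scratch_zone *scratch_create(void) { return calloc(1, sizeof(scratch_zone)); }

void *scratch_alloc(scratch_zone *z, size_t n) {
    scratch_block *b = malloc(sizeof *b + n);
    if (b == NULL)
        return NULL;
    b->next = z->head;               /* remember it for scratch_destroy() */
    z->head = b;
    return b->payload;
}

void scratch_destroy(scratch_zone *z) {
    for (scratch_block *b = z->head, *next; b != NULL; b = next) {
        next = b->next;
        free(b);
    }
    free(z);
}
#endif
```

After `scratch_destroy`, every pointer obtained from `scratch_alloc` is invalid, so no surviving data structure may still refer to them.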

Copying Memory Using Malloc

There are two main approaches to copying memory in OS X: direct and delayed. For most situations, the direct approach offers the best overall performance. However, there are times when using a delayed-copy operation has its benefits. The goal of the following sections is to introduce you to the different approaches for copying memory and the situations when you might use those approaches.

Copying Memory Directly

The direct copying of memory involves using a routine such as memcpy or memmove to copy bytes from one block to another. Both the source and destination blocks must be resident in memory at the time of the copy. These routines are especially suited for the following situations:

- The size of the block you want to copy is small (under 16 kilobytes).

- You intend to use either the source or destination right away.

- The source or destination block is not page aligned.

- The source and destination blocks overlap.

If you do not plan to use the source or destination data for some time, performing a direct copy can decrease performance significantly for large memory blocks. Copying the memory directly increases the size of your application’s working set. Whenever you increase your application’s working set, you increase the chances of paging to disk. If you have two direct copies of a large memory block in your working set, you might end up paging them both to disk. When you later access either the source or destination, you would then need to load that data back from disk, which is much more expensive than using vm_copy to perform a delayed copy operation.

Note: If the source and destination blocks overlap, you should prefer the use of memmove over memcpy. The implementation of memmove handles overlapping blocks correctly in OS X, but the implementation of memcpy is not guaranteed to do so.
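A minimal C sketch of a common overlapping copy: deleting an element from an array by sliding the tail left. `remove_at` is a hypothetical name.

```c
#include <stddef.h>
#include <string.h>

/* Removes element i from an array of n ints by shifting the tail left.
   Source and destination overlap, so memmove is required; memcpy's
   behavior would be undefined here. Returns the new length. */
size_t remove_at(int *a, size_t n, size_t i) {
    if (i >= n)
        return n;                                   /* nothing to remove */
    memmove(&a[i], &a[i + 1], (n - i - 1) * sizeof a[0]);
    return n - 1;
}
```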

Delaying Memory Copy Operations

If you intend to copy many pages worth of memory, but don’t intend to use either the source or destination pages immediately, then you may want to use the vm_copy function to do so. Unlike memmove or memcpy, vm_copy does not touch any real memory. It modifies the virtual memory map to indicate that the destination address range is a copy-on-write version of the source address range.

The vm_copy routine is more efficient than memcpy in very specific situations. Specifically, it is more efficient in cases where your code does not access either the source or destination memory for a fairly large period of time after the copy operation. The reason that vm_copy is effective for delayed usage is the way the kernel handles the copy-on-write case. In order to perform the copy operation, the kernel must remove all references to the source pages from the virtual memory system. The next time a process accesses data on that source page, a soft fault occurs, and the kernel maps the page back into the process space as a copy-on-write page. The process of handling a single soft fault is almost as expensive as copying the data directly.
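A minimal sketch of a delayed copy, assuming the Mach call `vm_copy(target_task, source_address, size, dest_address)` from `<mach/mach.h>`. Because vm_copy works on whole pages, the sketch only uses it when both addresses and the size are page-aligned; otherwise, and on non-Apple systems, it falls back to an ordinary direct memcpy (an assumption added so the code compiles elsewhere). `lazy_copy` is a hypothetical name, and the source and destination must not overlap.

```c
#include <stddef.h>
#include <string.h>
#include <unistd.h>
#ifdef __APPLE__
#include <mach/mach.h>
#endif

/* Copies size bytes from src to dst (non-overlapping). On Apple
   platforms, page-aligned copies use vm_copy, which marks dst as a
   copy-on-write version of src instead of touching the data.
   Returns 0 on success, -1 if vm_copy fails. */
int lazy_copy(void *dst, const void *src, size_t size) {
#ifdef __APPLE__
    size_t page = (size_t)sysconf(_SC_PAGESIZE);
    if (size > 0 && size % page == 0 &&
        (vm_address_t)src % page == 0 && (vm_address_t)dst % page == 0) {
        kern_return_t kr = vm_copy(mach_task_self(), (vm_address_t)src,
                                   (vm_size_t)size, (vm_address_t)dst);
        return kr == KERN_SUCCESS ? 0 : -1;
    }
#endif
    memcpy(dst, src, size);      /* direct copy: data is touched now */
    return 0;
}
```

Per the text, this only pays off when neither buffer is accessed for a while after the copy; otherwise the soft faults cost about as much as copying directly.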

Copying Small Amounts of Data

If you need to copy small blocks of non-overlapping data, you should prefer memcpy over any other routines. For small blocks of memory, the GCC compiler can optimize this routine by replacing it with inline instructions to copy the data by value. The compiler may not optimize out other routines such as memmove or BlockMoveData.

Copying Data to Video RAM

When copying data into VRAM, use the BlockMoveDataUncached function instead of functions such as bcopy. The bcopy function uses cache-manipulation instructions that may cause exception errors. The kernel must fix these errors in order to continue, which slows down performance tremendously.

Responding to Low-Memory Warnings in iOS

The virtual memory system in iOS does not use a backing store and instead relies on the cooperation of applications to remove strong references to objects. When the number of free pages dips below the computed threshold, the system releases unmodified pages whenever possible but may also send the currently running application a low-memory notification. If your application receives this notification, heed the warning. Upon receiving it, your application must remove strong references to as many objects as possible. For example, you can use the warnings to clear out data caches that can be recreated later.

UIKit provides several ways to receive low-memory notifications, including the following:

- Implement the applicationDidReceiveMemoryWarning: method of your application delegate.

- Override the didReceiveMemoryWarning method in your custom UIViewController subclass.

- Register to receive the UIApplicationDidReceiveMemoryWarningNotification notification.

Upon receiving any of these notifications, your handler method should respond by immediately removing strong references to objects. View controllers automatically remove references to views that are currently offscreen, but you should also override the didReceiveMemoryWarning method and use it to remove any additional references that your view controller does not need.

If you have only a few custom objects with known purgeable resources, you can have those objects register for the UIApplicationDidReceiveMemoryWarningNotification notification and remove references there. If you have many purgeable objects or want to selectively purge only some objects, however, you might want to use your application delegate to decide which objects to keep.

Important: Like the system applications, your applications should always handle low-memory warnings, even if they do not receive those warnings during your testing. System applications consume small amounts of memory while processing requests. When a low-memory condition is detected, the system delivers low-memory warnings to all running programs (including your application) and may terminate some background applications (if necessary) to ease memory pressure. If not enough memory is released—perhaps because your application is leaking or still consuming too much memory—the system may still terminate your application.

Copyright © 2003, 2013 Apple Inc. All Rights Reserved. Terms of Use | Privacy Policy | Updated: 2013-04-23

Alternative solution

The Code42 app only uses the maximum memory allotted if it is needed. However, if the maximum memory supported by your device does not accommodate the size of your backup file selection, there are several alternative ways to reduce memory usage.

Remove system and application files from your backup selection

The Code42 app is designed to back up user files (photos, documents, etc.). It is not designed to back up your operating system or application files. When system and application files are included in your backup file selection, the Code42 app uses more resources, like memory, backing up these files. Review our guidelines for choosing what to back up for more information.

Reduce backup frequency

The Code42 app monitors changes to files in real time based on your backup frequency and versions settings. More frequent backups or large numbers of files require the Code42 app to use more system resources to process your backup. You can reduce the frequency of the New Versions scan to conserve memory.

Reduce CPU allocated to the Code42 app

You can control the amount of CPU processing time the Code42 app is allowed to use both when you are present (working on your device) and away. Most modern devices can support allowing the Code42 app to use a high percentage of CPU time without experiencing any performance issues, but you may want to adjust these settings for older devices. You can adjust these settings from the Code42 app.

CPU settings apply to the amount of CPU processing time dedicated to Code42 app work, not to total CPU processing capacity. Therefore, while % CPU may be set at 20, for example, a system monitor may show that 70% of CPU capacity is used for the Code42 app at a particular point in time. For more information, see Configure CPU usage for backup in the Code42 console.

- Sign in to the Code42 app.

- Navigate to Device Preferences:

- Code42 app version 6.8.3 and later: Select Settings.

- Code42 app version 6.8.2 and earlier:

- Click Details.

- Select the arrow next to your device name.

- Choose Device preferences.

- Click Usage.

- Reduce the percentage of CPU time the Code42 app is allowed to use, both when the user is away and when the user is present.

Schedule backup

Finally, if you are still experiencing performance issues related to memory, you can configure the Code42 app to back up only at specified times. However, be careful to set a schedule that allows the Code42 app to run while your device is on and not in standby mode. You can adjust your backup schedule from the Code42 app.

- Sign in to the Code42 app.

- Navigate to Backup Set Settings.

- Code42 app version 6.8.3 and later:

- Select .

- Select Backup Sets.

- Code42 app version 6.8.2 and earlier:

- Select Details.

- Select the action menu.

- Next to Backup Schedule, click Change.

- Select Only run between specified times.

- Select the days and hours when the Code42 app is allowed to back up.

- Click Save.

Reduce your file selection

If you experience issues related to the Code42 app's memory usage after adjusting the settings described above, you may need to remove files from your selection to reduce the amount of memory needed to run the Code42 app.

Deselecting files from your backup file selection permanently deletes them from your backup archive. You cannot download files that have been removed from your backup file selection.